The Fraudfather's Dead Drop

Dead People Are Making Car Payments: Inside the $35 Billion Identity Factory

Why 1 in 114 Loan Applications Come From People Who Don't Exist

The $35 Billion Ghost Network: America's Invisible Identity Crisis

GM, Welcome Back to the Dead Drop

Five years ago, I received a call from a credit union in Tampa that still gives me pause. "We have a problem," the fraud director said. "We've been lending money to at least 153 dead people for two years, and they've been making payments."

That investigation led me into some of the most sophisticated international criminal networks I've encountered in twenty years of financial crimes work. What we discovered wasn't traditional identity theft; it was something far more insidious. Criminals weren't stealing identities. They were manufacturing them from scratch, creating an entire phantom economy worth $35 billion that most financial institutions don't even know exists.

The Case That Changed Everything

The Tampa case started simply enough. A routine audit flagged accounts whose Social Security numbers belonged to deceased individuals, yet the accounts showed regular payment activity. When we dug deeper, we found something unprecedented: these weren't isolated incidents. They were part of a coordinated operation in which criminals had created over 160,000 synthetic identities in the automotive lending sector alone.

The real masterpiece wasn't the volume; it was the patience. Fraudsters "nurture" their synthetic accounts by making legitimate payments, sometimes for years, to build a positive credit history before executing what we call the "bust-out": maxing out all available credit and vanishing.

Here's what shook me: the charge-off from a typical bust-out averages $15,000. But in automotive lending, we were seeing individual synthetic identities walk away with luxury vehicles worth $50,000 to $100,000.

Inside the Criminal Architecture

During my investigation, I learned something that fundamentally changed how I view financial fraud. Synthetic identity fraud has emerged as the fastest-growing form of financial crime, surpassing traditional credit card fraud and identity theft. But unlike every other fraud I've investigated, this one has no traditional victims to report the crime.

When someone steals your identity, you fight back. When someone creates a ghost identity, who calls the police?

The criminal methodology is ruthlessly elegant. Children's SSNs are 51 times more likely to be used in synthetic identity theft, a number that should haunt every parent reading this. Criminals target children because they won't check their credit for years, giving fraudsters a decade-plus runway to build legitimate credit histories.

But children aren't the only targets. Fraudsters typically begin by obtaining authentic personal data, often Social Security Numbers (SSNs) belonging to vulnerable groups such as children, the elderly, or homeless individuals: people unlikely to monitor their credit or challenge discrepancies.

The Numbers Behind the Nightmare

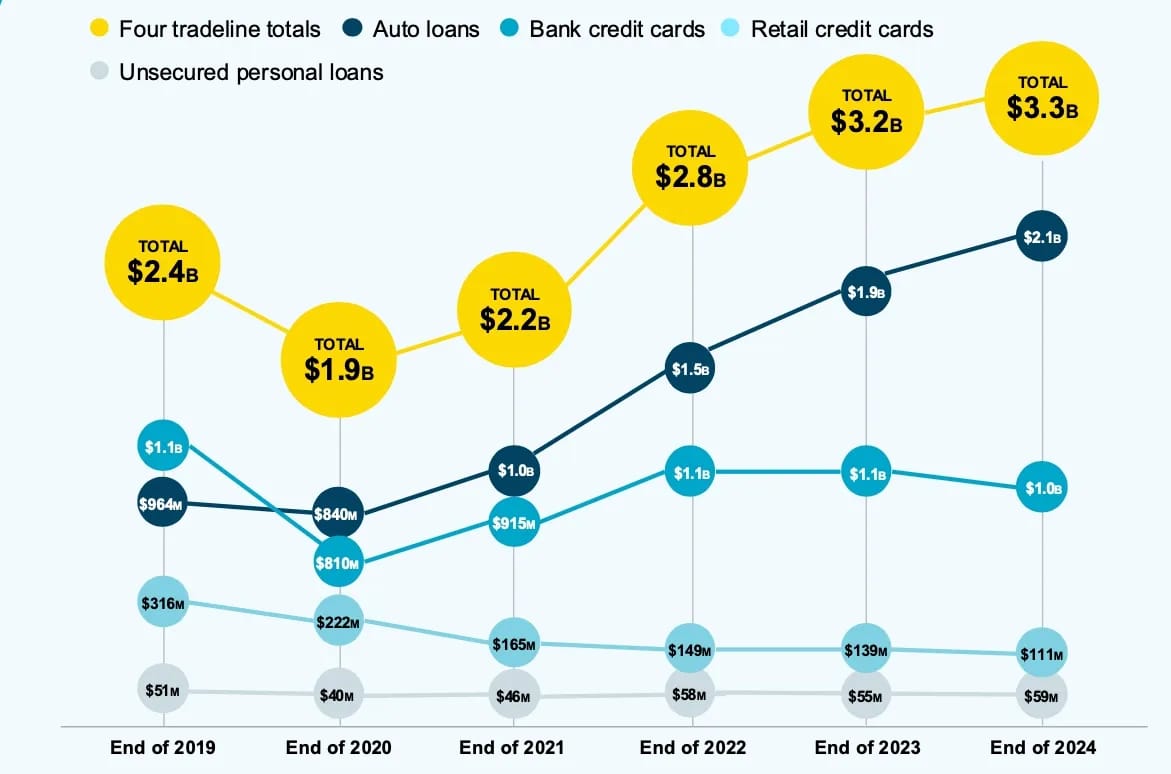

The scale of this operation defies comprehension. The total financial risk for U.S. lenders rose to $3.3 billion in 2024, up from $1.9 billion in 2020, driven by fraud concentrated against the automotive lending sector.

The estimated impact of synthetic identity fraud on lenders has grown since the pandemic to $3.3 billion. Source: TransUnion

But that's just what we can measure. Synthetic fraud losses are often reported as charge-offs due to bad debt, so overall estimates vary widely. Most organizations never realize they've been victimized.

The automotive sector became ground zero for a simple reason: profitability. Auto lenders are the most exposed to synthetic fraud, with losses in the first half of 2024 totaling $2 billion, double those of bank credit cards. The synthetic identity attack rate hit 88 basis points (0.88%) in 2024, meaning 1 out of every 114 applications involves a fake identity.
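A basis point is a hundredth of a percent, so the conversion from the 88-basis-point attack rate to the "1 in 114" figure is a one-liner. A minimal Python sketch; the function name is illustrative only.

```python
def bps_to_one_in_n(bps: float) -> float:
    """Convert a rate in basis points (1 bp = 0.01%) to its '1 in N' form."""
    rate = bps / 10_000  # 88 bps -> 0.0088, i.e. 0.88%
    return 1 / rate

n = bps_to_one_in_n(88)
print(f"88 bps = {88 / 100:.2f}% of applications = 1 in {n:.0f}")
# prints: 88 bps = 0.88% of applications = 1 in 114
```

The exact value is about 113.6, which rounds to the 114 used in the subtitle.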

AI: The Criminal Force Multiplier

What transformed this from a sophisticated scam into a $35 billion industry? Artificial intelligence.

An analysis of criminal Telegram channels revealed a 644% increase in conversations about AI and deepfakes used for fraud between 2023 and 2024. Criminals now have access to AI-powered identity generators, deepfake videos for bypassing identity verification, and AI-generated counterfeit identification documents.

The technical sophistication is staggering. Gen AI can make fake identities appear legitimate by, for instance, creating records of synthetic parents. It can learn from its mistakes and churn out more of what works.

Telegram has helped make fraud-as-a-service capabilities easily available for as little as $150. But the real money is in what criminals call "premade CPNs," a term that should alarm every financial institution executive.

CPN stands for "Credit Privacy Number," marketed by criminals as a legitimate alternative to Social Security Numbers for creating secondary credit profiles. Here's the reality: CPNs are nothing more than stolen Social Security Numbers, often from children or deceased individuals, rebranded and sold by criminal networks. When used to deceive lenders, they're completely illegal.

The criminal pricing structure reveals the sophistication of this market. Standard CPNs, freshly stolen SSNs with basic fake information, sell for $80 to $100. But "premade CPNs" are the premium product: template fake credit profiles created with stolen SSNs and then carefully aged for three years, complete with established payment histories and credit scores. These are fetching $750 or more because they can immediately qualify for significant credit lines.

The Geography of Ghosts

During my analysis of synthetic identity patterns, certain geographic concentrations emerged that tell a disturbing story. Houston remained the top city for synthetic identity fraud, followed by Chicago and Miami. Austin, Texas, showed the fastest year-over-year growth at 45%, while Tampa, Florida, had the highest overall synthetic fraud rate at 2.09% of applications.

These aren't random patterns. They represent organized criminal networks with sophisticated geographic distribution strategies. In Florida alone, we uncovered what investigators dubbed the "South Beach Bust Out Syndicate," which submitted more than $10 million in fraudulent applications for high-end vehicles such as BMW and Mercedes. The syndicate inflated incomes to an average of $284,000, often listing fake transportation-related LLCs as employers.

Detection: Finding Ghosts Among the Living

Here's what two decades of financial investigations taught me: criminals always leave patterns, even when they think they don't.

Synthetic identities have one fatal flaw: they're too perfect. Every synthetic profile TransUnion analyzed shared one universal trait: no record of open bankruptcies. Real people have financial struggles; synthetic identities are engineered to appear flawless.

The deeper patterns are even more revealing. Some 39% of synthetic identities are linked to no relatives, about 5.2 times the rate in the real population. Real people have messy lives: traffic tickets, utility bills, family connections, and years of digital footprints across multiple platforms.

The absence of such "living characteristics" often points to synthetic identities. For instance, lacking relatives or motor vehicle records can make an identity up to seven times more likely to be fraudulent.
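The "living characteristics" idea lends itself to a simple rule-based screen. The sketch below is a hypothetical illustration, not TransUnion's model: the `ApplicantProfile` fields, the two-year footprint cutoff, and the escalation threshold are all assumptions chosen for the example, and a production system would weight signals statistically rather than simply count them.

```python
from dataclasses import dataclass

@dataclass
class ApplicantProfile:
    # Hypothetical fields; real values would come from public-record and bureau lookups.
    relatives_on_record: int
    motor_vehicle_records: int
    utility_accounts: int
    years_of_digital_footprint: float

def missing_living_characteristics(p: ApplicantProfile) -> list[str]:
    """List the everyday traces of a real life that this profile lacks."""
    missing = []
    if p.relatives_on_record == 0:
        missing.append("no relatives")
    if p.motor_vehicle_records == 0:
        missing.append("no motor vehicle records")
    if p.utility_accounts == 0:
        missing.append("no utility accounts")
    if p.years_of_digital_footprint < 2:  # assumed cutoff for a "thin" footprint
        missing.append("thin digital footprint")
    return missing

def needs_manual_review(p: ApplicantProfile, max_missing: int = 1) -> bool:
    # Assumed policy: escalate when more than one signal is absent.
    return len(missing_living_characteristics(p)) > max_missing

ghost = ApplicantProfile(relatives_on_record=0, motor_vehicle_records=0,
                         utility_accounts=1, years_of_digital_footprint=0.5)
neighbor = ApplicantProfile(relatives_on_record=4, motor_vehicle_records=2,
                            utility_accounts=3, years_of_digital_footprint=12.0)
print(missing_living_characteristics(ghost))
print(needs_manual_review(ghost), needs_manual_review(neighbor))  # True False
```

Counting absent signals keeps the logic auditable; the trade-off is that every signal counts equally, whereas the text suggests some absences (relatives, vehicle records) are far stronger indicators than others.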

The Counter-Intelligence War

Financial institutions are fighting back, but they're locked in an arms race with AI-assisted criminals. "They are using the same tools that we are using to create a better mouse trap to catch these fraudsters. They can go to the cloud, and they can go use an AI model, and then they have access to the same tooling that we do."

The good news? Auto lenders prevent quite a bit of synthetic identity fraud, blocking $12 billion in fraudulent applications in 2023 while only $1.8 billion went through. That 87% prevention rate shows that lenders' existing systems are fairly effective.

The bad news? Synthetic applications are rising so rapidly that even though only 13% get through current controls, they still drive a high rate of overall losses.
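The arithmetic behind the 87% and 13% figures, and why a strong prevention rate still leaks more money as attempts grow, fits in a few lines. The 2023 dollar figures come from the text; the doubled attempt volume is a hypothetical scenario for illustration.

```python
def prevention_rate(blocked: float, approved: float) -> float:
    """Share of attempted synthetic fraud (by dollar value) that was stopped."""
    return blocked / (blocked + approved)

blocked, approved = 12.0, 1.8  # $ billions, auto lending, 2023 (from the text)
rate = prevention_rate(blocked, approved)
print(f"prevented: {rate:.0%}, got through: {1 - rate:.0%}")  # 87%, 13%

# At a fixed prevention rate, losses scale with attempted volume.
# The second value is a hypothetical doubling of attempts.
for attempted in (13.8, 27.6):
    print(f"attempted ${attempted}B -> ${attempted * (1 - rate):.1f}B lost")
```

Holding the rate constant and doubling attempts doubles the leakage from $1.8 billion to $3.6 billion, which is the dynamic the paragraph above describes.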

The Institutional Blindness Problem

Here's what keeps me awake: just 25% of financial services companies feel confident in addressing the threat posed by synthetic identity fraud, and just 23% feel confident in dealing successfully with AI and deepfake fraud.

We're facing an epidemic, and most institutions don't even know they're infected. When a synthetic identity defaults, it gets written off as a bad loan rather than identified as fraud, which is why loss estimates vary so widely.

This institutional blindness is creating a feedback loop where the true scope of the problem remains hidden, preventing the industry-wide response needed to combat it effectively.

The Fraudfather's Tactical Assessment

Twenty years investigating financial crimes taught me to distinguish between evolving tactics and fundamental paradigm shifts. Synthetic identity fraud represents the latter: a complete transformation of how criminals approach financial systems.

Immediate Intelligence Priorities:

Deploy public record cross-referencing for all applications over $25,000

Implement behavioral pattern analysis beyond traditional credit metrics

Establish consortium data sharing for synthetic identity intelligence

Create mandatory verification delays for "perfect" credit profiles
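As one illustration, the first and last of these priorities could be encoded as intake rules. This is a hypothetical sketch: the `Application` fields and the definition of a "too perfect" profile (no derogatory marks, no relatives on record, and a credit file younger than five years) are assumptions for the example; only the $25,000 threshold comes from the list above.

```python
from dataclasses import dataclass

PUBLIC_RECORD_THRESHOLD = 25_000  # dollars, from the priority list

@dataclass
class Application:
    # Hypothetical intake fields.
    amount: float
    derogatory_marks: int        # delinquencies, collections, bankruptcies
    relatives_on_record: int
    credit_file_age_years: float

def triage(app: Application) -> list[str]:
    """Return the extra verification steps this application should go through."""
    steps = []
    if app.amount > PUBLIC_RECORD_THRESHOLD:
        steps.append("public_record_cross_reference")
    # Assumed definition of a "too perfect" profile: spotless, young, no family ties.
    looks_too_perfect = (
        app.derogatory_marks == 0
        and app.relatives_on_record == 0
        and app.credit_file_age_years < 5
    )
    if looks_too_perfect:
        steps.append("verification_hold")
    return steps

print(triage(Application(48_000, 0, 0, 3.0)))   # both steps
print(triage(Application(12_000, 2, 3, 15.0)))  # []
```

Returning a list of steps rather than a yes/no decision keeps the rules composable: each new priority becomes another check that appends its own step.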

Strategic Defensive Evolution:

Develop AI-powered detection systems that analyze digital footprint depth, not just breadth

Build predictive models that identify synthetic identities before they establish credit

Create industry-wide synthetic identity reporting standards

Implement blockchain-based identity verification for high-value transactions

The Economic Reality Check: This type of fraud is expected to generate at least US $23 billion in losses by 2030. The global cost of identity fraud is projected to exceed $50 billion in 2025. We're not dealing with a crime wave. We're facing a criminal industry that rivals legitimate sectors in sophistication and revenue.

The Fraudfather Bottom Line

The $35 billion ghost network isn't coming; it's here. Synthetic identity fraud continues to expand, and losses from it continue to increase: They crossed the $35 billion mark in 2023. While you've been building defenses against traditional fraud, criminals have been building an entirely new economy.

The most dangerous aspect isn't the money they're stealing; it's the systematic erosion of trust in digital identity verification. When criminals can manufacture identities that pass institutional scrutiny for years, every application becomes suspect, and every customer becomes a potential phantom.

Stay sharp. Trust slowly. Verify everything.

Got a Second? The Dead Drop reaches 4,500+ readers every week, including security professionals, executives, and anyone serious about understanding systemic wealth transfers. Know someone who needs this intelligence? Forward this newsletter.

The Fraudfather's take on the week's biggest scams, schemes, and financial felonies, with the insider perspective that cuts through the noise.

The Criminal CRM Revolution: How $5,000 Bought the Underworld Fortune 500 Marketing Tools

Many of us understand that criminals evolve faster than most security teams can adapt. I've seen them pivot from telephone scams to email phishing, from crude malware to sophisticated social engineering. But what I have been investigating over the last couple of weeks fundamentally changes the game.

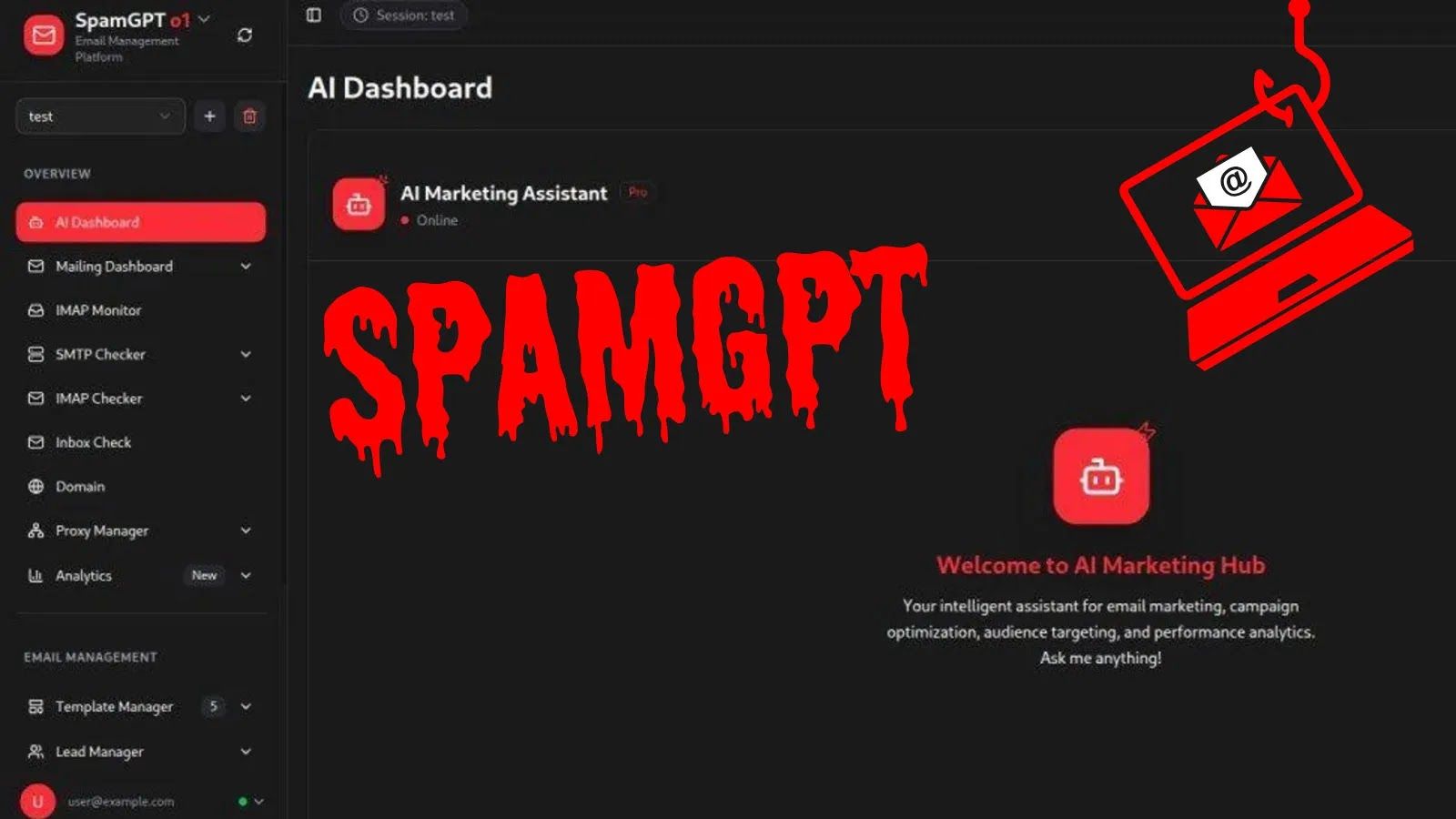

Meet SpamGPT, a $5,000 criminal toolkit that just democratized Fortune 500-level email marketing for the underworld.

Operational Reality: The Criminal CRM Revolution

During my years working financial crimes, we used to joke that most fraudsters couldn't organize a proper database if their freedom depended on it. Those days are over.

[Image: SpamGPT's intuitive dashboard can serve the most basic threat actor]

SpamGPT combines the power of generative AI with a full suite of email campaign tools, lowering the barrier for launching spam and phishing attacks at scale. What's most troubling? It mirrors legitimate marketing CRMs but is repurposed to facilitate phishing, ransomware, or other malicious payloads.

[Image: SpamGPT tracks open rates and marketing "analytics"]

I'm talking about a dark-themed dashboard that would make any marketing director envious: campaign management modules, SMTP/IMAP configuration, deliverability testing, real-time analytics, and an AI assistant called "KaliGPT" that generates convincing phishing content on demand. This empowers even low-skilled actors to access the infrastructure needed for large-scale attacks.

[Image: SpamGPT offers full campaign command and control (C2)]

The numbers tell the story of our new reality: almost 1.2% of all emails sent are malicious, amounting to approximately 3.4 billion phishing emails each day. More alarming, the total volume of phishing attacks has skyrocketed by 4,151% since the advent of ChatGPT in 2022. That's not a typo: it's more than a forty-fold increase in volume since generative AI became widely accessible.
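Two quick sanity checks on these figures: the total daily email volume implied by taking the 1.2% share and the 3.4 billion count together, and how a percentage increase converts to a multiplier. A 4,151% increase is added on top of the original 100%, so the new volume is about 42.5 times the old one.

```python
malicious_share = 0.012   # "almost 1.2%" of all email
phishing_per_day = 3.4e9  # approximately 3.4 billion phishing emails daily

# If 3.4B is 1.2% of all mail, the total is 3.4B / 0.012.
total_per_day = phishing_per_day / malicious_share
print(f"implied total email volume: ~{total_per_day / 1e9:.0f} billion/day")  # ~283

pct_increase = 4151  # percent increase in phishing volume since 2022
multiplier = 1 + pct_increase / 100  # new volume relative to the old
print(f"a {pct_increase:,}% increase means {multiplier:.1f}x the original volume")  # 42.5x
```

Because "almost 1.2%" is slightly below 1.2%, the implied total is a rough lower bound rather than a measured figure.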

The Criminal Playbook: Professional-Grade Infrastructure

Here's what keeps me awake at night: SpamGPT isn't just about sending bulk emails. The platform promises guaranteed inbox delivery for popular email providers (Gmail, Outlook, Yahoo, Microsoft 365, etc.), implying that it has been fine-tuned to bypass their email filters.

The operational sophistication is staggering:

Infrastructure Management: Attackers can bulk import SMTP accounts and the tool even provides a bulk SMTP & IMAP checker utility to validate that credentials work and are not blocked. They're rotating dozens of compromised servers automatically.

Deliverability Testing: SpamGPT automates inbox placement tests: an inbox check module can send test emails to designated IMAP accounts and then automatically check those inboxes to see if the messages arrived successfully. If a test email hits the spam folder, they adjust content or switch servers before launching the full campaign.

AI-Generated Content: The integrated KaliGPT assistant doesn't just write emails; it crafts psychologically optimized content based on targeting data. Attackers no longer need to write convincing phishing emails; they can ask the AI for persuasive scam templates, subject lines, or targeting advice within the spam toolkit.

Spoofing Capabilities: The platform facilitates advanced spoofing techniques, allowing attackers to customize email headers and impersonate trusted brands or domains. They're not just sending fake emails; they're engineering them to pass authentication checks.
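On the receiving side, one defensive habit is to read the DMARC verdict only from the `Authentication-Results` header (RFC 8601) stamped by your own inbound gateway, because a sender can forge that header too. A minimal sketch using Python's standard `email` module; the trusted gateway name `mx.example.com` and the sample message are hypothetical, and real gateways perform this check themselves, so treat this as an audit aid rather than a filter.

```python
import re
from email import message_from_string

TRUSTED_AUTHSERV_ID = "mx.example.com"  # hypothetical: your own inbound gateway

def dmarc_result(raw_message: str) -> str:
    """Return the DMARC result recorded by our own gateway, or 'none'."""
    msg = message_from_string(raw_message)
    for header in msg.get_all("Authentication-Results", []):
        # The authserv-id is the token before the first ';'.
        authserv_id, _, results = str(header).partition(";")
        # Ignore results stamped by anyone else; senders can forge them.
        if authserv_id.strip().lower() != TRUSTED_AUTHSERV_ID:
            continue
        match = re.search(r"\bdmarc=(\w+)", results, re.IGNORECASE)
        if match:
            return match.group(1).lower()
    return "none"

raw = (
    "Authentication-Results: mx.example.com; spf=pass smtp.mailfrom=bank.example;"
    " dkim=fail header.d=bank.example; dmarc=fail header.from=bank.example\n"
    "From: Payments <payments@bank.example>\n"
    "Subject: Urgent wire approval\n"
    "\n"
    "Please approve today.\n"
)
forged = (
    "Authentication-Results: attacker.example; dmarc=pass\n"
    "From: Payments <payments@bank.example>\n"
    "\n"
    "Looks legitimate.\n"
)
print(dmarc_result(raw), dmarc_result(forged))  # fail none
```

The sketch assumes the authserv-id carries no version suffix; RFC 8601 allows one, which a fuller parser would strip.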

Target Analysis: Who's in the Crosshairs

The targeting has become surgical. Senior executives are 23% more likely to fall victim to AI-driven, personalized attacks. Why? Their busy schedules and the trust they place in authority figures make them prime targets.

Industry breakdown shows the financial sector leading at 46% of cyberattacks involving phishing in 2021, followed by healthcare, where phishing attacks led to $10 million in recovery costs per ransomware incident in 2025. Small businesses face particularly brutal odds: an employee of a small business with fewer than 100 employees will experience 350% more phishing and other social engineering attacks than an employee of a larger enterprise.

Field Manual: Advanced Defensive Protocols

The traditional email security approach is failing catastrophically. There was a 104.5% increase in the number of malicious emails bypassing Secure Email Gateways (SEGs). We need new tactics.

Phase 1: Immediate Hardening

Enforce strict DMARC policies with "reject" action (not "quarantine")

Deploy AI-powered email security solutions specifically designed to detect AI-generated content

Implement zero-trust email verification for all financial transactions

Establish out-of-band verification for any email requesting sensitive actions
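Whether a domain's published policy actually says "reject" is easy to audit. This sketch parses a DMARC TXT record (the value published at `_dmarc.<domain>`, retrievable with a tool such as `dig _dmarc.example.com TXT`) and checks for a full-strength reject policy. It follows the RFC 7489 defaults (`sp` inherits `p`; `pct` defaults to 100) and omits the DNS lookup and error handling, so treat it as a starting point.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags

def is_strict_reject(record: str) -> bool:
    """True if failing mail is rejected for the domain and all its subdomains."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return False
    policy = tags.get("p", "").lower()
    subdomain_policy = tags.get("sp", policy).lower()  # sp defaults to p
    pct = int(tags.get("pct", "100"))                  # pct defaults to 100
    return policy == "reject" and subdomain_policy == "reject" and pct == 100

print(is_strict_reject("v=DMARC1; p=quarantine; rua=mailto:dmarc@example.com"))  # False
print(is_strict_reject("v=DMARC1; p=reject; rua=mailto:dmarc@example.com"))      # True
```

Checking `sp` and `pct` matters because a record can say `p=reject` while leaving subdomains open (`sp=none`) or applying the policy to only a sample of failing mail.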

Phase 2: Human Factor Reinforcement

Remember: in 74% of breaches, human factors played a role, encompassing social engineering tactics, mistakes, or misuse. Traditional awareness training isn't enough when criminals have AI assistants crafting psychologically optimized content.

Simulate AI-generated phishing scenarios in security training

Establish mandatory cooling-off periods for urgent financial requests

Create verification protocols that can't be bypassed by urgency tactics

Train employees to recognize AI-generated linguistic patterns
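A cooling-off period only works if urgency cannot shorten it. This hypothetical sketch gates a payment request on two conditions, out-of-band verification and elapsed time; the 24-hour window and the `PaymentRequest` fields are assumptions for illustration, not a recommended policy value.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

COOLING_OFF = timedelta(hours=24)  # hypothetical policy value

@dataclass
class PaymentRequest:
    received_at: datetime
    marked_urgent: bool                 # recorded for audit; deliberately ignored below
    verified_out_of_band: bool = False  # e.g. a call-back to a number already on file

def may_execute(req: PaymentRequest, now: datetime) -> bool:
    """Release only after out-of-band verification AND the full cooling-off period.

    Urgency never shortens the wait: urgency is the lever these scams pull.
    """
    if not req.verified_out_of_band:
        return False
    return now - req.received_at >= COOLING_OFF

t0 = datetime(2025, 1, 6, 9, 0)
req = PaymentRequest(received_at=t0, marked_urgent=True)
print(may_execute(req, t0 + timedelta(hours=1)))   # False: not yet verified
req.verified_out_of_band = True
print(may_execute(req, t0 + timedelta(hours=1)))   # False: still cooling off
print(may_execute(req, t0 + timedelta(hours=25)))  # True
```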

Phase 3: The Passkey Defense

Here's where we turn the tables: passkeys represent our best defense against this new threat landscape. Unlike passwords or even traditional 2FA, passkeys are phishing-resistant thanks to their origin binding, their use of public-key cryptography, and their elimination of shared secrets.

The technical reality is elegant: because passkeys are bound to a website or app's identity, they resist phishing. The browser and operating system ensure that a passkey can only be used with the website or app that created it. Even if criminals perfect their spoofing techniques, they can't steal what isn't there: your private key never leaves your device.

Because the private key never leaves your device, it cannot be intercepted or phished, which eliminates the risk of credential phishing. SpamGPT's sophisticated spoofing becomes irrelevant when the authentication method is cryptographically bound to the legitimate domain.
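Part of what makes origin binding work happens on the server. In WebAuthn, the browser writes the page's real origin into the signed `clientDataJSON`, and the relying party rejects any assertion whose origin, challenge, or type doesn't match. The sketch below shows only those checks; the relying-party origin and look-alike domain are hypothetical, and the signature, RP ID hash, and authenticator-flag checks that complete a real verification are deliberately omitted.

```python
import base64
import json
import secrets

EXPECTED_ORIGIN = "https://bank.example"  # hypothetical relying party

def b64url_encode(data: bytes) -> str:
    """Unpadded base64url, the encoding WebAuthn uses for the challenge."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def check_client_data(client_data_json: bytes, expected_challenge: bytes) -> bool:
    """Origin, challenge, and type checks from assertion verification (sketch only)."""
    data = json.loads(client_data_json)
    return (
        data.get("type") == "webauthn.get"
        and data.get("challenge") == b64url_encode(expected_challenge)
        and data.get("origin") == EXPECTED_ORIGIN
    )

challenge = secrets.token_bytes(32)  # fresh per login attempt
legit = json.dumps({"type": "webauthn.get", "challenge": b64url_encode(challenge),
                    "origin": "https://bank.example"}).encode()
phished = json.dumps({"type": "webauthn.get", "challenge": b64url_encode(challenge),
                      "origin": "https://bank-example-login.com"}).encode()
print(check_client_data(legit, challenge), check_client_data(phished, challenge))  # True False
```

In practice the authenticator won't even offer a passkey on the look-alike domain, because credentials are scoped to the relying party's ID; the origin check is the server-side backstop if anything slips past the client.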

Strategic Implementation: The Passkey Rollout

For organizations serious about defense, the transition plan is clear:

Immediate Actions:

Pilot passkeys for privileged accounts and high-value targets

Implement passkey-required policies for financial transactions

Begin user education on the new authentication experience

Medium-term Objectives:

Expand passkey deployment to all sensitive applications

Eliminate SMS-based 2FA wherever possible

Establish passkey-only zones for critical operations

Long-term Vision:

Achieve comprehensive phishing resistance across all systems

Eliminate shared secrets from authentication workflows

Create authentication systems that criminals simply cannot compromise

The economic case is compelling: the average cost of a phishing breach in 2024 was $4.88 million, up 9.7% from 2023. Compare this to passkey implementation costs, and the ROI becomes obvious.

The Fraudfather Bottom Line

SpamGPT isn't just another criminal tool; it's a paradigm shift that demands an equally revolutionary response. The days of playing defense with traditional email security are over. Criminals now have AI assistants, professional-grade infrastructure, and guaranteed delivery systems.

But here's what they don't have: the ability to steal cryptographic keys that never leave your device. They can't phish what you never type. They can't intercept what never transmits. They can't steal what doesn't exist in a stealable form.

The path forward requires abandoning the mindset that better email filters will save us. Instead, we must eliminate the attack vector entirely through phishing-resistant authentication.

Quick Reference: Emergency Protocols

✓ Immediate Actions:

Implement strict DMARC "reject" policies

Deploy AI-aware email security solutions

Establish out-of-band verification for financial requests

Begin passkey pilot programs for privileged accounts

✗ What Doesn't Work:

Relying solely on traditional email filters

SMS-based 2FA for sensitive operations

Email-based password resets for critical accounts

User awareness training without technical controls

Red Flag Indicators:

Emails requesting urgent financial actions

Unexpected password reset requests

Communications bypassing normal approval workflows

Requests for authentication information via email

The criminals have industrialized. It's time we respond in kind.

The Fraudfather combines a unique blend of experience as a former Senior Special Agent, Supervisory Intelligence Operations Officer, and now recovering Digital Identity & Cybersecurity Executive. He has dedicated his professional career to understanding and countering financial and digital threats.

This newsletter is for informational purposes only and promotes ethical and legal practices.